🤣 hahaha 🤪

Yes, that's right, the M1 Pro MAX is so power efficient, it actually produces power instead of consuming it. With the Apple Thunderbolt to Edison dongle, you can plug in your blender, hair dryer, or charge your Tesla.


M1 Max Chip May Have More Raw GPU Performance Than a PlayStation 5


Things will get really interesting if M1 Max proves to have good ETH hash rates at lower power consumption (like >50 MH/s @ <50 W).

They’re in demand for crypto mining, not gaming.

This 8x RTX 3090 machine makes $128,000 per year:

10 x GeForce RTX 3090 cards liquid + mineral oil cooled crypto rigs

10 x NVIDIA GeForce RTX 3090 graphics cards + 3 x 1600W PSUs, mineral oil cooled = craziest mining rig in the world EVER.

www.tweaktown.com

Cinema 4D, all the Adobe suite, any 3D modelling... The power won't go to waste. Games are secondary or tertiary on a Mac, always were, and that won't change overnight.

and yet Apple dropped the ball by not having one game announcement from an AAA publisher.

Games on the new Mac are like Final Cut or Logic Pro on the M1 iPad... we have sooooo much power... for nothing.

I'm willing to bet a hundie that if someone did publish a quality game for both platforms, the M1 Max would give the PS5 or Xbox Series X a good run for its money.

Such illiterate logic 🤣.

Apple’s marketing and their fanbase never fail to impress me, especially on MacRumors…

10.4 TF doesn’t mean that it can actually use 10.4 TF if it’s power limited to ±60 W and probably even bandwidth starved (it lacks superior L1 and L2 caches, doesn’t use a unified L3 cache, lacks a geometry engine, doesn’t support techniques such as VRS and storage APIs…)

The AMD Radeon V is actually 14.9 TF and the AMD VEGA 64 is 13.4 TF, and both are slower than the PS5 and Series X, significantly slower in fact!

Don’t fall for the fake marketing, people. By no means are these MacBooks slow or anything, but if you actually believe that it’s faster than a PS5 you have to seek help…

For consoles to consistently hit their maximum TFLOPS performance they actually use this kind of heatsink.

View attachment 1871105

With what test? Obviously it's cherry picked. If they think anyone will believe an M1 Max is better than a RTX 3080 overall they're smoking crack.

Dave Lee (Dave2D) said they were comparing it to the RTX 3080 Razer

No way. There's a lot more to it and the M1 Max simply isn't designed for it. Not saying it'd be bad, but there is simply no way Apple made an SoC that can hang with the consoles yet.

I'm willing to bet a hundie that if someone did publish a quality game for both platforms, the M1 Max would give the PS5 or Xbox Series X a good run for its money.

I don't exactly think you're wrong, but it's a bit rich to call out this article for illiterate logic and then make equally illiterate claims. Saying it's going to be power limited to 60 watts and therefore can't hit 10.4 TF is dumb when they're estimating it will hit 10.4 TF at 60 watts. And saying it'll be bandwidth starved when its bandwidth is only slightly lower than the PS5's but it has double the bus width makes no sense. Then finally you wrap this up with "look how big the console heatsink is, therefore impossible." You do realize that's the whole point of the hype, that the efficiency is really good? I could go grab the heatsink from a 2010 Xeon and call the PS5's CPU power impossible.

Like, you're right that TF doesn't equal performance, and given Apple doesn't care about gaming, yeah, it won't beat the PS5 in gaming 'cos it likely isn't tailored for it, but man, was that a terrible way to justify that. Also, not sure how you know the cache and microarchitecture specs of the chips, but I'd be keen to see where you found that if you wouldn't mind sharing.

Only the mobile, not the desktop version, though yeah, it ain't exactly beating that in gaming most likely.

With what test? Obviously it's cherry picked. If they think anyone will believe an M1 Max is better than a RTX 3080 overall they're smoking crack.

Plenty of people enjoy AAA games. Gaming is deserving of Apple staffing up more, with a team of people working with developers and more. Gotta remember, the days of John Carmack appearing with Steve Jobs were years ago.

Apple would be foolish to pursue AAA games.

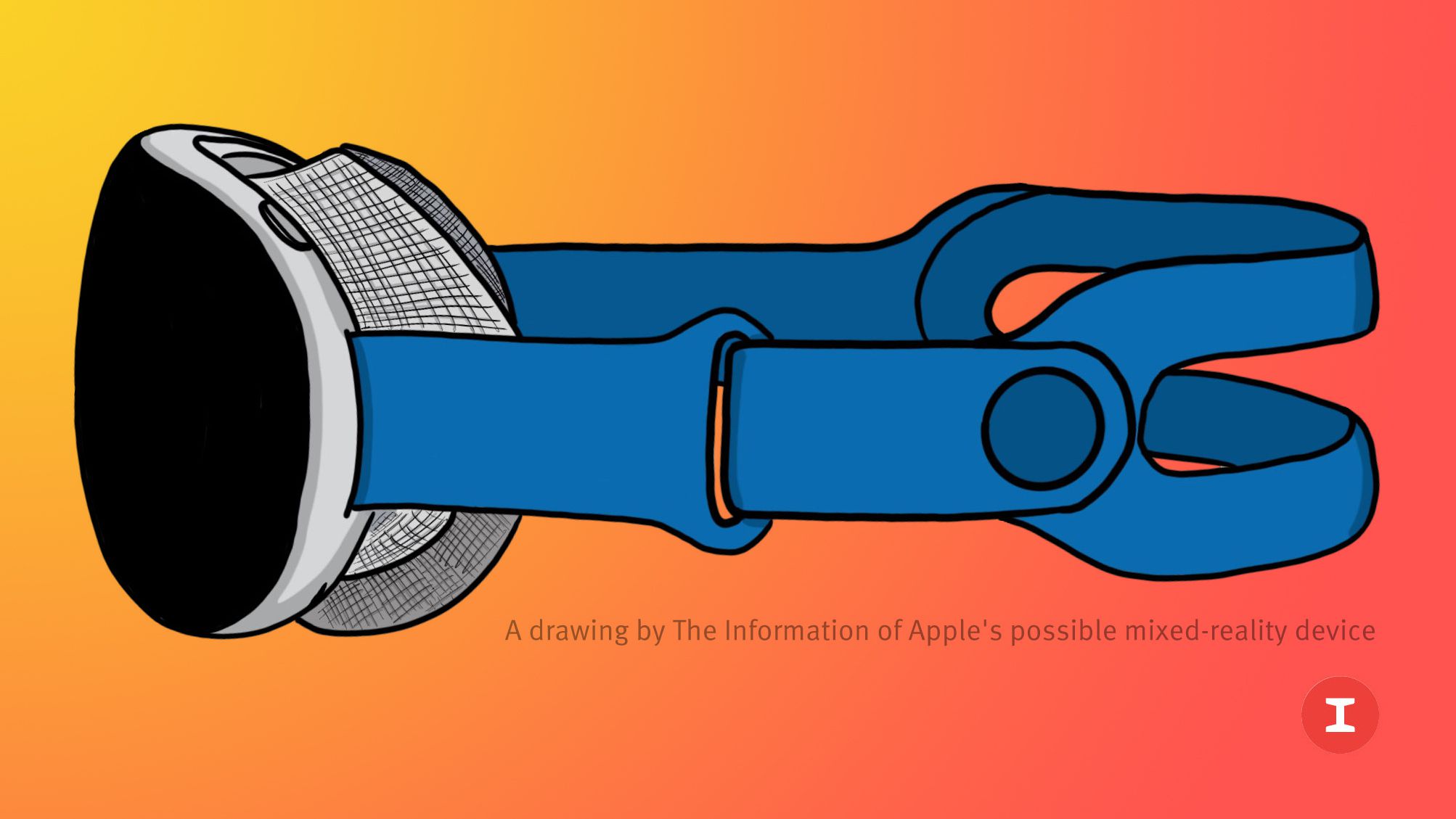

Even though they are huge, they still have limited resources. They are investing those resources to create low power/high performance chips for one reason: to power stereoscopic rendering of two 8k displays while processing sensor input. Where, you ask, is that? It's right here:

Kuo: Mass Production on Apple's AR/VR Headset May Be Delayed Until End of 2022

Apple may not begin production on its upcoming AR/VR headset until the end of the fourth quarter of 2022, Apple analyst Ming-Chi Kuo said today in a note sent out to investors. That would likely result in the device launching late in 2022 or in early 2023.

www.macrumors.com

This is the foundation for a much, much larger market than AAA games. It doesn't matter how it does it (maybe without raytracing or CUDA compatibility, who cares?), so long as it gets the job done. That is a good business decision, and Apple is right for pursuing it.

Would be great to have them get Macs, Apple TVs and iOS devices a range of games, not just Minion Rush on an iPad for 7-year-olds.

Didn't Apple say during WWDC '21 that Apple's Metal team worked closely with game developers on optimising their games using Xcode tooling? It sure looks to me like Apple is not ignoring game developers.

Gaming is deserving of Apple staffing up more, with a team of people working with developers and more. Gotta remember, the days of John Carmack appearing with Steve Jobs were years ago.

I think Apple is playing the long game. They know most of their Mac install base does not have decent GPU capabilities to play games, and the Macs that do are too expensive. The transition to AS changes that. Now all AS Macs have those capabilities. In 3-5 years, when most of the Mac install base is AS, it will likely tempt game developers to start releasing games for macOS, iOS, iPadOS and tvOS.

Sounds like Apple should purchase a game developer. M$ got Bungie back in the day, and now Bethesda. It may be time to fix the problem themselves.

It’s not just about ARM, it’s also the way the operating system functions: its libraries, its APIs, its kernel, the way unified memory works, Metal vs OpenGL/Direct3D/Vulkan.

If Apple wants to woo developers, then it needs to dedicate staff resources to something like Unreal Engine 5 support and then announce it in the keynote. Microsoft put in the work back in the 90s and 00s with Direct3D. Apple needs to do the same in a robust, unleashed manner.

With what test? Obviously it's cherry picked. If they think anyone will believe an M1 Max is better than a RTX 3080 overall they're smoking crack.

That's not what they said. They compared against the RTX 3080 available in a comparably sized laptop (Razer)... ergo, the GPU alone is clocked at "only" 105W instead of the 165W in larger laptops and the 200W+ that it is capable of in a desktop unit. Then we need to add the CPU wattage of the Ryzen 9.

For the entire MBP with M1 Max to be pushing similar performance at only 60W combined is an incredible feat. If you think that the Razer with RTX 3080 will be better overall, then we know who is smoking crack...

1) The MBP can perform the same unplugged; the Razer drops significantly when unplugged, both in CPU and GPU performance.

2) Memory bandwidth on the MBP is significantly higher: 400 GB/s vs 68 GB/s.

3) Up to 64 GB of memory available to the GPU/CPU on the MBP vs 16 GB max (GPU) and 16 GB max (CPU) in the Razer.

4) Battery life: the Razer gets 7 hrs 46 min at idle (10.4 W) with the screen at 0% and the backlight off. That drops to 5 hrs 45 min (15 W) with text editing in Word and light internet use with the backlight at 40%. LOL! The MBP gets 17 hrs or 21 hrs depending on 14" vs 16".

5) 7.2 GB/s SSD speed on the MBP.

As a mobile workstation, I know which one will be the better performer. Not once did Apple mention gaming... their entire presentation was around comparing mobile workstation performance.

Edit: Anandtech has a preliminary write up: https://www.anandtech.com/show/1701...m1-max-giant-new-socs-with-allout-performance


The PS5 is sold at a loss.

Sony Says $499 PS5 No Longer Sells at a Loss

Sony has also managed to secure enough chips to hit its 22 million console sales target this fiscal year.

Now imagine what chip will be in the next Mac Pro.

This does sort of clarify that the pricing of the next Mac Pro may not be all that low.

Question: why are you so obsessed with this 60W thing? In other comments here you keep carrying on about how "The GODS have spoken, and no one can ever compute 10 TF without blasting out 200 W of power..." or some such unfounded, unjustified nonsense.

Do you not remember a few years ago when AMD was shipping GPUs that could fry eggs, while NVIDIA was humming along at much lower TDP? Do you honestly believe that "because AMD makes hot chips, therefore every chip ever made in the universe must be just as hot, or it doesn't exist and is just a marketing ploy?"

How can you claim that other chip manufacturers cannot _ever_ make a more efficient chip than a competitor?

Case study: the M1 GPU takes 313 s to render a certain scene in Blender (the standard bmw27 scene). The same scene takes 132 s to render on a GTX 780 Ti. The 780 Ti is a 6 TFLOPS machine, which means the M1 is pulling the equivalent of ~2.5 TFLOPS (6 × 132 / 313), just shy of the theoretical limit of 2.6 TFLOPS. And it has a ~12 W power package for the GPU+RAM.

M1 conclusion: ~200 GFLOPS per Watt.

Look at the M1 Max, which has ~60 W to play with:

60 W * 200 GFLOPS/W = 12 TFLOPS.

And the claim here is that it only gets 10.4 TFLOPS.

Furthermore, the 780 Ti itself manages only like 24 GFLOPS/W, which means that the 320 W RTX 3080 should only ever be able to churn out 8 TFLOPS _max_ according to your flawed argument. And if you seriously think the 3080 is capped at 8 TFLOPS, then I guess I give up. (For the record it can get 19 TFLOPS at 200 W, which you also claim is impossible.)
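For anyone who wants to check the napkin math above, here is a minimal Python sketch of it. It assumes render time scales inversely with TFLOPS, which is the same simplification the post makes. The function and constant names are made up for illustration, and the 250 W board power for the 780 Ti is an assumption that is not stated in the post (it is simply what 24 GFLOPS/W implies for a 6 TFLOPS card).

```python
# Back-of-the-envelope check of the efficiency figures quoted in the post.
# Every input is taken from the post except GTX_780TI_WATTS (an assumption).


def scaled_tflops(ref_tflops: float, ref_seconds: float, seconds: float) -> float:
    """Estimate throughput, assuming render time is inversely proportional to TFLOPS."""
    return ref_tflops * ref_seconds / seconds


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert a throughput and a power draw into GFLOPS per watt."""
    return tflops * 1000.0 / watts


def tflops_at(watts: float, efficiency_gflops_per_watt: float) -> float:
    """Project throughput at a power budget, assuming efficiency stays constant."""
    return watts * efficiency_gflops_per_watt / 1000.0


GTX_780TI_TFLOPS = 6.0     # figure used in the post
GTX_780TI_SECONDS = 132.0  # bmw27 render time from the post
GTX_780TI_WATTS = 250.0    # ASSUMPTION: not in the post, implied by 24 GFLOPS/W
M1_SECONDS = 313.0         # bmw27 render time from the post
M1_GPU_WATTS = 12.0        # GPU+RAM power package from the post

m1_tflops = scaled_tflops(GTX_780TI_TFLOPS, GTX_780TI_SECONDS, M1_SECONDS)
m1_efficiency = gflops_per_watt(m1_tflops, M1_GPU_WATTS)

# The post rounds the M1 efficiency down to 200 GFLOPS/W before projecting.
m1_max_projection = tflops_at(60.0, 200.0)

gtx_efficiency = gflops_per_watt(GTX_780TI_TFLOPS, GTX_780TI_WATTS)
rtx_3080_cap = tflops_at(320.0, gtx_efficiency)

print(f"M1 equivalent throughput: {m1_tflops:.2f} TFLOPS")
print(f"M1 efficiency: {m1_efficiency:.0f} GFLOPS/W")
print(f"M1 Max at 60 W and 200 GFLOPS/W: {m1_max_projection:.1f} TFLOPS")
print(f"780 Ti efficiency: {gtx_efficiency:.0f} GFLOPS/W")
print(f"RTX 3080 at 320 W with 780 Ti efficiency: {rtx_3080_cap:.2f} TFLOPS")
```

Treat it as a sanity check only. Constant efficiency across power budgets is the big simplification here, which the post itself admits when it says the perf/power equations aren't exactly linear.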

Maybe the M1 Max is not this efficient, and the perf/power equations aren't exactly linear, but these M1 chips certainly aren't the wild, crazy, conspiratorial, earth shattering, universe destroying, physics denying phenomenon you are trying to make it out to be. Holy Jeeziz Bajeeziz, Batman.

Instead of AppleTV+, Apple should have purchased a stake in a major game studio, bought one outright, or started its own. Best case, do both AppleTV+ and AppleArcade+. I wonder which investment would have turned a profit faster.

I bought a $4,000 2019 MBP with a Vega 20. It plays Elder Scrolls Online WORSE than a friend's 2020 with integrated graphics. It hitches every few seconds like it's texture thrashing. It doesn't matter how much "power" they put in the chip if the industry doesn't optimize games for it. Bethesda has already said they won't support Apple silicon, and I imagine that a lot of other top-tier companies feel the same way. I will never look to do anything other than casual gaming on future Macs, and won't waste the money on upgrading them to try to do so again. Unless a miracle occurs, it's just never going to happen.

Dave2D said that this is between the 100W 3080 from Razer and the 160W 3080 in the MSI monster laptop.

With what test? Obviously it's cherry picked. If they think anyone will believe an M1 Max is better than a RTX 3080 overall they're smoking crack.

When I had my Power Computing Power Center 120 with PowerPC 604/604e processor, I had loads of power but Mac OS 8.5 was slow and the cooperative multitasking was holding it back. I booted BeOS and it showed how fast an operating system could be on the same hardware.

Chicken and the egg situation. Hardware had to exist first, before games would be made.

When Mac OS X finally arrived, Apple didn't want it running on any Mac clones. It ran sufficiently well on a dual CPU G4 at 867 MHz.

Apple always seemed to be out of sync with their hardware and software. Now, we're at a similar moment.

Unless you count making a large chunk of their users happy, which they clearly don’t.

Making users happy doesn't always mean more $$$. You have to invest a significant amount of money into helping developers and provide dedicated teams to get AAA games launched.

I’m on the verge of switching to Windows entirely if I can sort out a couple of wrinkles in my workflow, and if I do that I’ll probably replace my aging XS Max with an Android device and not replace my 2017 iPad Pro at all, so making users unhappy can certainly cost them money.

Making users happy doesn't always mean more $$$. You have to invest a significant amount of money into helping developers and provide dedicated teams to get AAA games launched.

Also, there are a huge, huge number of gamers, from casual to hardcore, who would simply never consider a Mac because of the sorry state of Mac gaming. There’s market share to be gained (and existing customers to be retained), but they don’t want it for whatever reason.

I’ve always found it puzzling that Apple has no interest in that market, given how important gaming is to their iOS money machine.

But that’s just it, right? On iOS they get a portion of the price of every game sold, so they go out of their way to make iOS an excellent gaming platform.

On the Mac they don’t get to take a commission on anything sold on Steam or the Epic games store or direct from a publisher, so they consider Mac gaming an afterthought. If they ever lock the Mac down the way iOS is and force all app installs through the Mac App Store, watch how quickly they start courting game developers.

Which, as I’ve said, is all fine. Apple doesn’t owe me anything, I’m just finding it harder and harder to justify owning two expensive machines (one for work, one for play) instead of one. I like simplicity and dislike clutter.

Plus, Apple has always had a certain arrogance about it — “here is what you should want, whether you’re bright enough to realize it or not, so shut up and be happy with it.”

That attitude really began grating on me with the 2016 MacBook Pro refresh, and their years-long insistence on trying to slap silly band-aids (keyboard condoms, in point of fact) onto an obviously broken keyboard (and thermal) design.

Like, okay, you screw up, that’s fine. But if you keep screwing up for years and telling me the problems are all in my head (I’m using it wrong, perhaps) … it just leaves a bad taste in one’s mouth.

What is a geometry engine? Don't buy marketing; every GPU since 1981 is a geometry engine. A geometry engine is primarily a CPU with high-precision floating-point operations, matrix multiplication, a four-point vector, and clipping and mapping instructions for coordinates.

Fundamentally the PS5 SoC is an AMD Zen 2 CPU with 8 cores at 3.5 GHz; the M1 Pro and Max already have 10 cores and much higher-end GPUs. AMD does one more thing: it adds SRAM directly on the chip, eliminating the need for expensive cache, and Apple did the same. It is conceivable that this chip will actually beat or be on par with the PS5 SoC.

Whether that performance can be sustained at those thermal envelopes is yet to be seen, but these chips are 5nm while the PS5 SoC is a 7nm chip, so there would be a reduction in thermal output. I don't think it's a stretch to think these can deliver sustained performance. And if not, we would need a larger heatsink.

Intel said the same before M1 came out, but when they did the benchmarks they realised it is they who were smoking the aforementioned contraband.

With what test? Obviously it's cherry picked. If they think anyone will believe an M1 Max is better than a RTX 3080 overall they're smoking crack.

Beat the PS5 doing what?

Yeah, every GPU has a geometry engine. In this case Sony isn't using AMD's, they are using their own (it is why the PS5 doesn't actually support Mesh Shaders like the XSX does).

The last thing you want to do is buy a maxed-out M1 Max MBP and try to play AAA games on Parallels Desktop.
